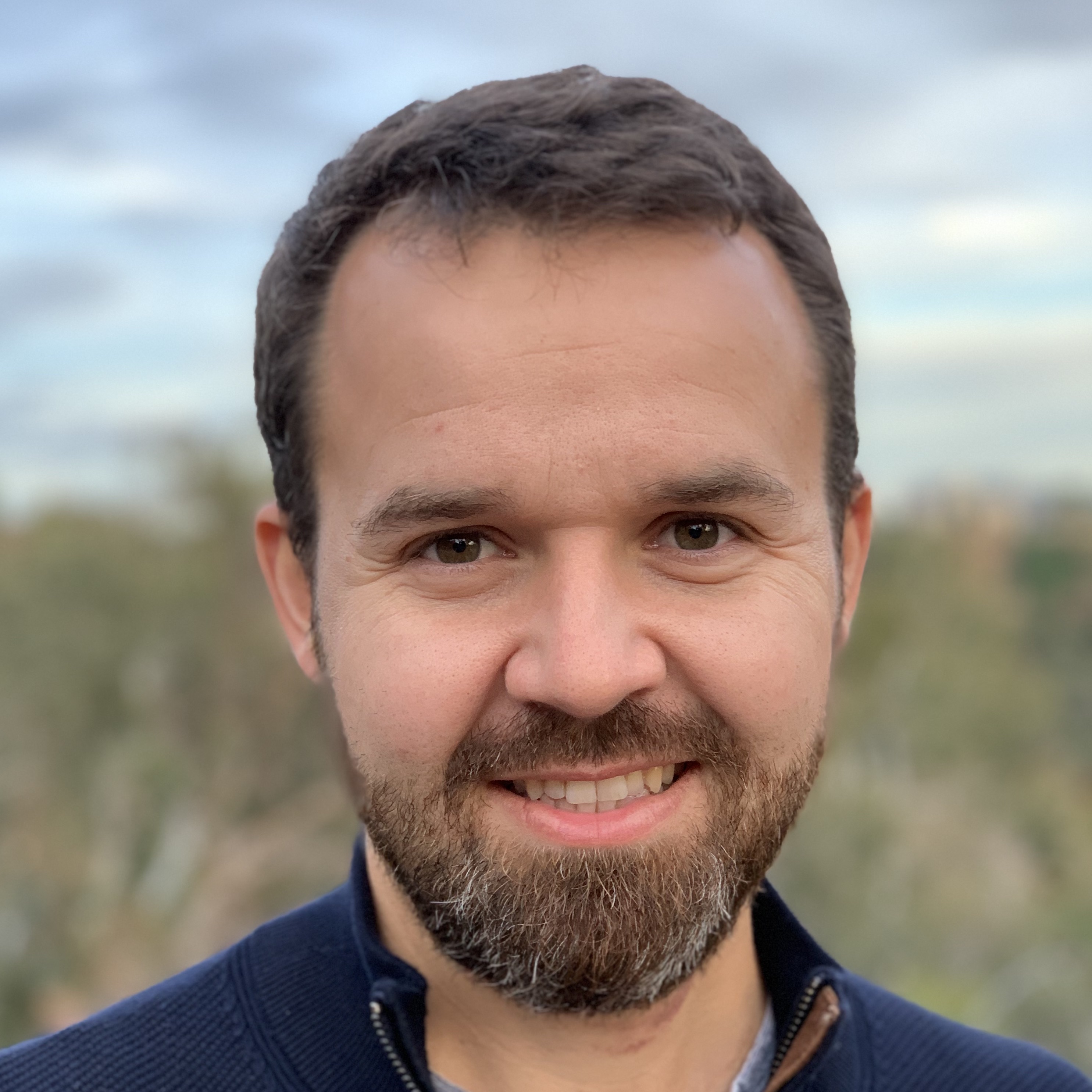

Sergey Tulyakov is a Principal Research Scientist at Snap Inc., where he leads the Creative Vision team. His work focuses on creating methods for manipulating the world via computer vision and machine learning. This includes human and object understanding, photorealistic manipulation and animation, video synthesis, prediction and retargeting. He pioneered unsupervised image animation with MonkeyNet and the First Order Motion Model, which sparked a number of startups in the domain. His work on Interactive Video Stylization received the Best in Show Award at SIGGRAPH Real-Time Live! 2020. He has authored 30+ top conference papers, journal articles, and patents, resulting in multiple innovative products, including Snapchat Pet Tracking, OurBaby, Real-time Neural Lenses (gender swap, baby face, aging lens, face animation) and many others. Before joining Snap Inc., Sergey was with Carnegie Mellon University, Microsoft, and NVIDIA. He holds a PhD degree from the University of Trento, Italy.

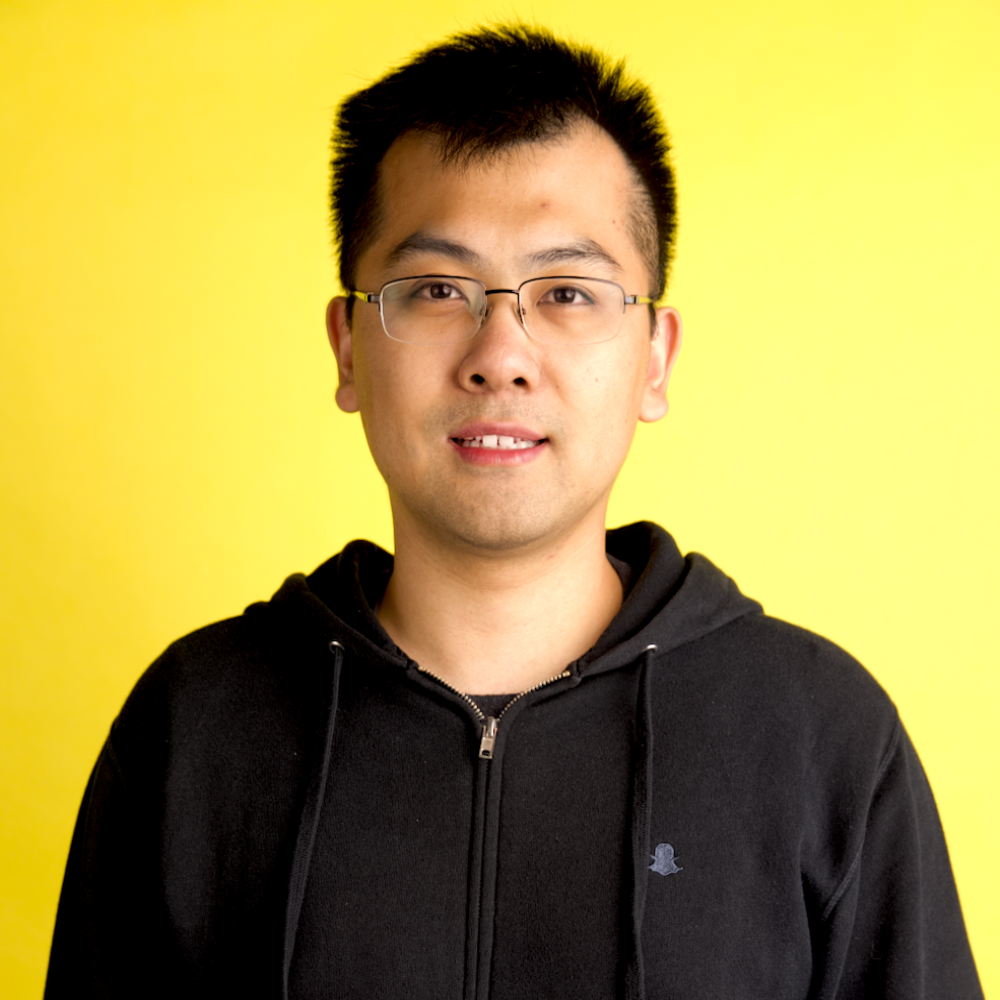

Jian Ren is a Research Scientist in the Creative Vision team at Snap Research. He received his Ph.D. in Computer Engineering from Rutgers University in 2019. He is interested in image and video generation and manipulation, and efficient neural networks. Before joining Snap Inc., Jian interned at Adobe, Snap, and ByteDance.

Stéphane Lathuilière is an associate professor (maître de conférences) at Telecom Paris, France, in the multimedia team. Until October 2019, he was a post-doctoral fellow at the University of Trento in the Multimedia and Human Understanding Group, led by Prof. Nicu Sebe and Prof. Elisa Ricci. He received his M.Sc. degree in applied mathematics and computer science from ENSIMAG, Grenoble Institute of Technology (Grenoble INP), France, in 2014. He completed his master's thesis at the International Research Institute MICA (Hanoi, Vietnam). He worked towards his Ph.D. in mathematics and computer science in the Perception Team at Inria under the supervision of Dr. Radu Horaud, and obtained it from Université Grenoble Alpes (France) in 2018. His research interests cover machine learning for computer vision problems (e.g., domain adaptation, continual learning) and deep models for image and video generation. He has published papers in the most prestigious computer vision and machine learning conferences (CVPR, ICCV, ECCV, NeurIPS) and top journals (T-PAMI).

Aliaksandr Siarohin is a Research Scientist in the Creative Vision team at Snap Research. Previously, he was a Ph.D. student at the University of Trento, where he worked under the supervision of Nicu Sebe in the Multimedia and Human Understanding Group (MHUG). His research interests include machine learning for image animation, video generation, generative adversarial networks and domain adaptation. His work has been published in top computer vision and machine learning conferences. He also did internships at Snap Inc. and Google. He was a 2020 Snap Research Fellow.