Promptable Game Models (PGMs)

We propose Promptable Game Models (PGMs), a framework for controllable video generation and editing. A PGM is trained to respond to prompts, i.e. to generate videos that satisfy a set of user constraints expressed as textual actions or desired states of the environment. The learned model can synthesize scenes with explicit control over the pose and position of articulated objects, the camera, and the style.

Playing with PGMs

To answer prompts, our model learns a representation of physics and of the game logic. The user can generate results by issuing actions. To unlock a high degree of expressiveness and enable fine-grained action control, we make use of text actions.

Director's mode with PGMs

Knowledge of physics and of the game logic lets our model perform complex reasoning about the scene. It enables a "director's mode", in which high-level constraints or objectives are specified as prompts and the model generates complex action sequences that satisfy them.

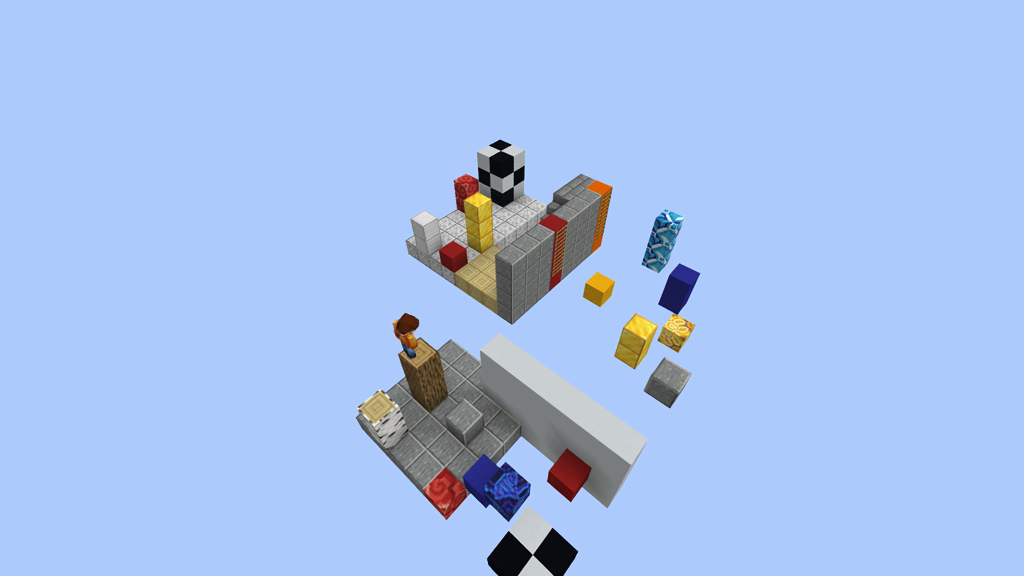

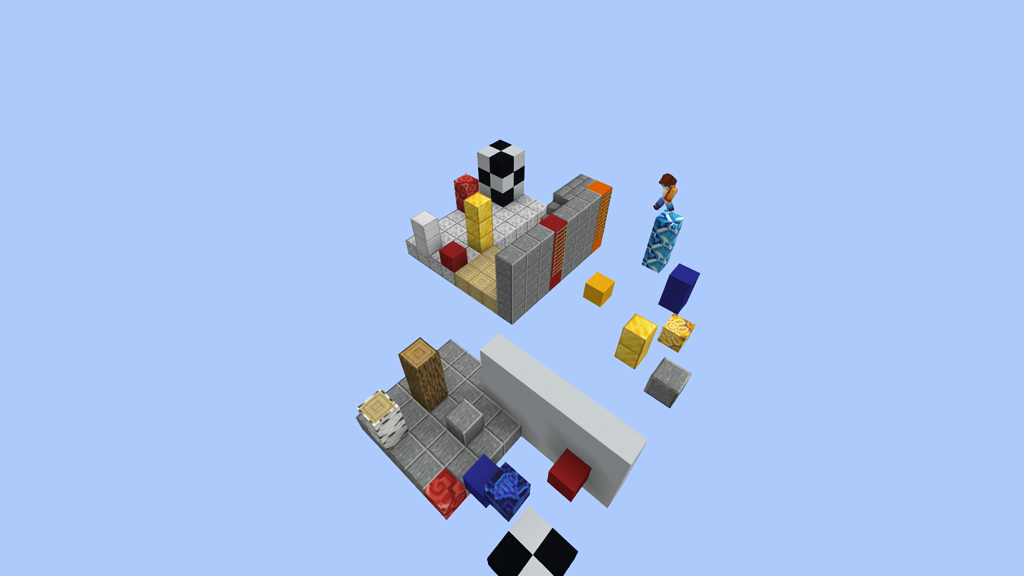

As a simple example, our model can be given an initial and a final state and generate the entire trajectory in between:

Initial state

Final state

Generated Videos

If a further conditioning action is given in the middle of the video, the model generates a completion that also satisfies the additional constraint and changes the player's path accordingly:

More conditioning actions can be inserted at different times to generate multiple waypoints:

The model can also be asked to perform complex reasoning, such as working out which actions to take to win a point. To do so, the user can condition the top player on the action that he should "not catch the ball" at the end of the sequence:

Original video = Bottom player loses

1/2 Original video + "The [top] player does not catch the ball" = Bottom player wins
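The director's mode examples above all share one shape: a sequence of frames, with text actions or desired states pinned to particular players at particular times, and everything else left for the model to fill in. The following is a minimal sketch of how such a set of constraints could be represented, not the interface of our model. The names `Constraint`, `DirectorPrompt`, `add` and `unconstrained_frames` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Constraint:
    """One prompt: a text action or desired state pinned to a player at a frame.

    Hypothetical container, not part of the PGM codebase.
    """
    frame: int
    player: str  # e.g. "top" or "bottom"
    text: str


@dataclass
class DirectorPrompt:
    """A sequence length plus the constraints a generated video should satisfy."""
    num_frames: int
    constraints: List[Constraint] = field(default_factory=list)

    def add(self, frame: int, player: str, text: str) -> "DirectorPrompt":
        """Pin a text action to `player` at `frame`; returns self for chaining."""
        if not 0 <= frame < self.num_frames:
            raise ValueError(f"frame {frame} is outside [0, {self.num_frames})")
        self.constraints.append(Constraint(frame, player, text))
        return self

    def unconstrained_frames(self, player: str) -> List[int]:
        """Frames where nothing is specified for `player`, i.e. left to the model."""
        pinned = {c.frame for c in self.constraints if c.player == player}
        return [t for t in range(self.num_frames) if t not in pinned]


# The "win a point" example: a single constraint on the top player at the
# last frame of a short (illustrative) 4-frame sequence.
example = DirectorPrompt(num_frames=4).add(
    3, "top", 'The [top] player does not catch the ball'
)
```

Waypoints at intermediate times would be expressed the same way, by calling `add` with earlier frame indices. How a model consumes such constraints is outside the scope of this sketch.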

PGM Datasets

To enable learning promptable models of games, we build two datasets with camera calibration, 3D player poses, 3D ball localization and fine-grained text actions for each player and each frame: